How to simultaneously write to shared memory with multiple processes

Most of our modern day computers have more than one processing unit. They are often referred to as cores. For a developer, effectively using multiple cores simultaneously to speed up program execution by parallel processing comes with challenges. One of them is changing the value of a variable in shared memory.

In this blog post I introduce one of the two most common methods to overcome this problem by synchronizing access to shared memory: fork and semaphores. (The other option would be pthreads and mutexes.)

Parallel processing

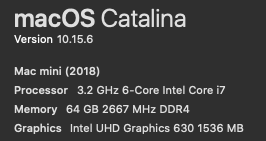

Most computers nowadays come with a multi-core processor. In fact, the Mac that I’m using to write this blog on comes with 6 cores. At least that’s what the system configuration menu tells me:

Does this mean that all software runs 6 times as fast? Unfortunately not. Only a small subset of all software effectively leverages the enourmous computing power that modern laptops and desktops possess. Why? Writing software that executes multiple tasks in parallel is significantly more complex than solving the same problem sequentially.

How many cores does my computer have?

At this point, you may want to know how many cores or processing units your computer is equipped with. To retrieve the number of processing units you can use the following ‘C’ code

1long nproc = sysconf(_SC_NPROCESSORS_ONLN);

which, in my case, returns ... 12. Why the difference to the 6 cores that were reported by the system configuration above? Hyperthreading. 6 physical cores inside the computer are presented as 12 virtual cores to the operating system.

Multiprocessing and multithreading

The 2 main technical options for a developer to implement parallel processing are multiprocessing and multithreading. Both allow to execute multiple tasks simultaneously by spinning-off separate processes or threads that run independently of the main process.

Beware that in both methods tasks really run “simultaneously”, or in parallel, only if there is more than 1 processing unit. If you run multiple processes or threads on a single core computer, all tasks are in the end still executed sequentially, one after the other, by the same (aka. only) processor without any gain in performance.

Also, be aware that task scheduling is done by the operating system, not by the application. Thus, as a developer you cannot decide what task, no matter whether it’s a process or a thread, is run by what core. In fact, if you run multiple tasks there is no guarantee at all that each of these tasks will actually run on a different processing unit. Fortunately, modern operating systems allocate the workload evenly, hence in practice your processes are typically allocated to different processing units.

Challenges of parallel processing

To effectively use multiple cores in an application, the first thing a developer needs to do is to adapt the application logic or flow. Simply running the same algorithm on a multiple cores machine will not result in any performance improvement. Further down below I will provide an example for such a change in application logic.

Second, you will need your multiple processes or threads to exchange information or to communicate with each other. This is called IPC or inter process communication. The most common techniques to support IPC are typically either based on messaging or on memory. Messaging based techniques include SIGNALs and PIPEs. Information is sent as messages from one task to another.

In this post I want to explore the memory based approach. Instead of multiple tasks exchanging messages they communicate by writing information to memory that can be read by other tasks. In most cases this method is faster than messaging via PIPEs or SIGNALs.

Yet, this method comes with one big caveat. If multiple tasks write to the same shared memory at the same time they may conflict with each other. This is called a race condition because the tasks run in parallel, like multiple runners in a race, and you cannot predict which runner wins, i.e. you don’t know the order in which these tasks are executed.

To overcome this problem of race conditions we therefore need to synchronize any write access to shared memory. Let’s look at a simple example to demonstrate this:

A simple problem – solved with a single process

Let’s say we have a large array of random integers between 0-9 and we want to calculate the frequency of each digit. How many 1s, 2s, 3s…. etc are there in the array. It’s a very simple problem that is solved in 2 steps.

First, create the array with the random numbers:

1 // create a large array of random numbers between 0-9

2

3 long arrlen = 100000; // arbitrary length of the array

4 int ndigits = 10; // there are 10 digits [0-9]

5

6 long *arr = malloc(arrlen * sizeof(long));

7

8 for (int i=0; i<arrlen; i++) arr[i] = rand()%ndigits;

Second, count the frequencies per digit:

1 // count the frequency of each digit in the array

2

3 long *freq_sp = calloc(ndigits, sizeof(long)); // frequency counter per digit [single process]

4

5 for (long i=0; i<arrlen; i++) {

6 freq_sp[arr[i]]++;

7 usleep(100);

8 }

Since this is a very simple calculation, it would take less than 1 second to complete. For the purpose of this demo, I simulate a more complex algorithm that takes longer to complete by simply adding a usleep pause of 0.1 milliseconds into the loop.

At the end, we want to confirm the calculation. The sum of all frequencies should match arrlen. Consider this a checksum that we want to refer to further later.

Let’s also time the calculation and output the number of seconds the whole algorithm took to complete:

1 long arrsum = 0;

2

3 for (int i=0; i<ndigits; i++) arrsum += freq_sp[i];

4

5 printf("Sum of all frequencies: %'14ld (%.0f sec)\n",arrsum,difftime(time(NULL), start_time));

If you put the pieces of code above together and run it your output should look similar to this:

1Sum of all frequencies: 100,000 (13 sec)

A single process took about 13 seconds to complete this calculation. (The time on your computer may vary. But the time obviously will always be 10+ seconds since we added 100,000 times a pause of 0.1 milliseconds which translates into 10 seconds.)

Solving the problem with multiple processes

Now, let’s see whether we can speed-up this calculation if we use multiprocessing.

As I mentioned above, the first thing to do for multiprocessing is to adapt the logic or flow of the application. We need to find instructions that can be effectively run in parallel without affecting the result.

In our simple example we run a for loop from 1 to arrlen. If multiple processes all were to run this same loop we would not only get a different result but we would also likely end up with an even slower algorithm.

Instead, we split the array into segments and let each process calculate the digit frequencies of the numbers in that particular segment. So, if we assume that we want to run 2 processes in parallel, then process #1 would work on segment [0..49,000] and process #2 would work on segment [50,000..99,999]. The same logic applies to any other number of processes that you may want to run in parallel. The more processes, the more segments.

The resulting code for this new logic should look something like this:

1 int nproc = 2; // the number of processes to run simultaneously

2

3 for (int p=0; p<nproc; p++){

4

5 // define a segment that each process works on independently

6 long from_idx = (p==0) ? 0 : p * (arrlen/nproc);

7 long to_idx = (p==nproc-1) ? arrlen : (p+1) * (arrlen/nproc);

8

9 for (long i=from_idx; i<to_idx; i++) {

10 freq_mp[arr[i]]++; // increment frequency counter per digit [multi process]

11 usleep(100);

12 }

13 }

So, almost there. But something is still missing. Above code is still executed by a single process. How to create 2 or more processes that run in parallel, and then make each process work on a different segment of the array?

The 2 most common techniques to run multiple tasks in parallel are fork and pthread. While the former basically clones your whole program (aka. process), the latter spins off a new thread within the current process. Therefore, when we’re using fork we’re talking about multiprocessing, and when we’re using pthread we’re talking about multithreading.

I won’t go into much detail about the difference between the two. There are abundant online resources on this topic. Put simply, you can think of the following analogy:

Think of your program as a worker. fork clones this worker and gives you 2 independent workers that both handle the workload in parallel. pthread on the other side grows your worker a new pair of arms Each pair works independently but is still part of the same body and controlled by the same brain.

Multiprocessing using fork

Citing from its man page fork “creates a new process by duplicating the calling process. The new process is referred to as the child process. The calling process is referred to as the parent process.”

I recommend reading the man page and doing some of the many online tutorials on fork before you continue. The usage of fork and understanding the resulting program logic can be a bit tricky in the beginning, especially if you fork multiple times.

Below code shows a useful generic structure that can be widely applied whenever you want to do multiprocessing. It runs any task nproc times in parallel by creating nproc child processes:

1 int nproc = 6;

2

3 int pid;

4 for (int n=0; n<nproc; n++){

5

6 if ((pid = fork()) < 0) {perror("fork");exit(1);}

7 if (pid==0) {

8

9 // code that shall be executed by each child process

10

11 printf("Hello! I am process [%d].\n", getpid());

12

13 /// ...

14

15

16 _exit(0);

17 }

18

19 }

20

21 // wait for all child processes to finish

22 while(wait(NULL) != -1);

23

24 // parent process proceeds....

Please note that at the end the parent process waits until all child processes have exited and only then resumes the program flow. Not waiting for child processes to finish is often a source of errors.

The wait command also gives you the opportunity to bring the application logic back into a single process. Often this is needed, for example when only a certain part of your application logic can be parallelized. When this part is completed, you want to proceed sequentially. Using

1while(wait(NULL) != -1);

allows you to do so.

Also, beware that in above generic code structure nproc represents the number of processes, not the number of processors. You could run more processes than there are actual processing units.

Simple problem using multiprocessing

Now, let’s put it all together. Let’s apply the above multiprocessing code structure to our problem of calculating the frequencies of random digits:

1 int nproc = 2; // the number of child processes to run simultaneously

2

3 start_time = time(NULL);

4

5 int pid;

6 for (int p=0; p<nproc; p++){

7

8 if ((pid = fork()) < 0) {perror("fork");exit(1);} // exit if fork was not successful

9

10 // define what each child process will do

11 if (pid==0) {

12

13 // define a segment that each child process works on independently

14 long from_idx = (p==0) ? 0 : p * (arrlen/nproc);

15 long to_idx = (p==nproc-1) ? arrlen : (p+1) * (arrlen/nproc);

16

17 // sum up the frequencies in this segment of the array

18 for (long i=from_idx; i<to_idx; i++) {

19 freq_mp[arr[i]]++; // increment frequency counter per digit [multi process]

20 usleep(100);

21 }

22

23 _exit(0);

24 }

25

26 }

27

28 // wait for all child processes to finish

29 while(wait(NULL) != -1);

30

31 // calculating the sum of all frequencies to check

32 arrsum = 0;

33 for (int i=0; i<ndigits; i++) arrsum += freq_mp[i];

34

35 printf("Sum of all frequencies: %'14ld (%.0f sec)\n",arrsum,difftime(time(NULL), start_time));

If you add this code to your previous code and run it alltogether you should see something like this:

1Sum of all frequencies (single process) : 100,000 (13 sec)

2Sum of all frequencies (multiprocessing): 0 (6 sec)

The second calculation, using multiprocessing, required only 6 seconds versus 13 seconds for the single process calculation. However, the sum of the frequencies, which serves as our checksum to verify that our calculation worked correctly, now returns a 0 instead of the expected 100,000. Why?

Shared memory for IPC

In the above code I purposely didn’t include the definition of the freq_mp variable. If you defined it using malloc, same as we did previously for a single process, you will end up with this wrong result. The problem is that once you forked the current process, each resulting child process will have a separate copy of all variables of the parent process. If the child process changes any of those variables, those changes have no impact on the original variable in the parent process. So when the child processes were incrementing freq_mp those changes did not affect the copy of freq_mp in the parent process which however is the one that we sum up at the end.

To solve this problem, we need to define freq_mp as shared memory that can be accessed, and in particular written to, by both parent and child process. I do this with mmap:

1 // define a shared memory to store the frequency counters

2

3 long *freq_mp = mmap(0, ndigits * sizeof(long), PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);

4 if (freq_mp == MAP_FAILED) {perror("mmap");exit(1);}

Now, all child processes can write to freq_mp and their changes can be read by the parent process. Actually, not only by the parent process but also by all other child processes.

When the shared memory is not used anymore you don’t free it as you would for malloc allocated variables. Instead, the corresponding method for shared memory created with mmap is munmap:

1 if (munmap(freq_mp, ndigits * sizeof(long)) == -1) {perror("munmap");exit(1);}

Therefore, make sure you add the above at the end of your code.

Great. So let’s see what happens. Let’s run the code again, using multiprocessing and this time using shared memory. If you do so, you will see an output that looks like this:

1Sum of all frequencies (single process) : 100,000 (13 sec)

2Sum of all frequencies (multiprocessing): 99,988 (6 sec)

Wow! What is this?! Now, our multiprocessing did calculate the frequencies, however the sum of those frequencies mystically is not correct. The sum that was calculated by multiple processes in parallel is not equal to the sum that a single process calculated. (In your own testing you may see a smaller or larger difference, or in fact, you may see no difference at all. But, in general, very likely you will see a difference, and the larger the array the larger the difference.)

What happened?

Remember race conditions?

The problem with the above code is that multiple processes try to write to the same shared memory at the same time. This causes some of the writes getting lost or cancelled out.

To avoid this problem we need to synchronize the writes. Without synchronization there is no guarantee that all writes are successfully completed.

Synchronizing memory writes for multiprocessing

Synchronizing writes means that if one process writes to a variable in shared memory, this process locks the variable first so that no other process can write to it. After the writing is complete, the process unlocks or releases the shared memory and only then another process will be able to write to it. In short, what we need is a mutually exclusive write.

The 2 most common techniques to synchronize memory access are semaphores and mutexes. You can think of both as some low-level registers that can be set to a certain value, and then depending on that value either allow or block access to a resource.

Semaphores are more powerful, can be used across processes, and support incrementing values instead of simply setting a binary 0 or 1. They behave like ‘counting locks’ that only open if their value is 1. Semaphores are defined in sets of arbitrary length. Each set is identified by its unique ID.

In our example, I use ‘semaphores’ as simple on/off switches, similar to mutexes. Altogether, we need the following 5 steps to use semaphores effectively:

Step 1: Define

1int nsems = 1; // number of semaphores in this set

2int semid = semget(IPC_PRIVATE, nsems, 0666 | IPC_CREAT);

This will create a new semaphore set with 1 semaphore.

Step 2: Initialize

1semun_t semun = {.val = 1}; // initial semaphore value => 1 = released/unlocked

2semctl(semid, 0, SETVAL, semun);

This will set the initial value of the semaphore to 1, which means it’s unlocked.

Step 3: Lock

1struct sembuf sb = {.sem_num = 0, .sem_op = 0, .sem_flg=0};

2

3sb.sem_op = -1; // lock the semaphore

4semop(semid, &sb, 1);

Before any process writes to the shared variable, use semop to lock the semaphore.

This will automatically block other processes that attempt to write to this variable. Those processes are made wait until the semaphore is unlocked.

Step 4: Release/Unlock

1struct sembuf sb = {.sem_num = 0, .sem_op = 0, .sem_flg=0};

2

3sb.sem_op = 1; // release/unlock the semaphore

4semop(semid, &sb, 1);

After a process has completed writing to the shared variable, use semop to unlock the semaphore.

Step 5: Remove

1semctl(semid, 0, IPC_RMID);

Whenever a semaphore set is not needed anymore it should be properly removed. This cleanup process is important because semaphores continue to exist even when the calling program is completed.

There is also a limit to the amount of semaphores that can be created and that can exist at any given time. Hence, if you don’t remove garbage you may at some point reach the given limit of your computer which makes any subsequent call to semget fail.

You can see a list of open semaphores via the console using:

1$ ipcs -s

The limit of the maximum number of semaphores that can exist at any given time can be checked via

1long max_nsem = sysconf(_SC_SEM_NSEMS_MAX);

or in the console via

1$ ipcs -S

2

3

4 semmap: 30 (# of entries in semaphore map)

5 semmni: 0 (# of semaphore identifiers)

6 semmns: 0 (# of semaphores in system)

7 semmnu: 0 (# of undo structures in system)

8 semmsl: 87381 (max # of semaphores per id)

9 semopm: 5 (max # of operations per semop call)

10 semume: 10 (max # of undo entries per process)

11 semusz: 32 (size in bytes of undo structure)

12 semvmx: 32767 (semaphore maximum value)

13 semaem: 16384 (adjust on exit max value)

on Mac OS or via

1$ cat /proc/sys/kernel/sem

on Linux.

Synchronizing memory writes with semaphores

Now, let’s put all of the above together and use semaphores to ensure that our multiprocessing calculation ends up with the correct frequency counts.

1 // create a very large array of random numbers between 0-9

2

3 long arrlen = 100000; // arbitrary length of the array

4 int ndigits = 10; // there are 10 digits [0-9]

5 long *arr = malloc(arrlen * sizeof(long));

6 for (int i=0; i<arrlen; i++) arr[i] = rand()%ndigits;

7

8

9 // PART I -- counting frequencies with a single processes

10

11 time_t start_time = time(NULL);

12 long *freq_sp = calloc(ndigits, sizeof(long)); // frequency counter per digit (single process)

13 for (long i=0; i<arrlen; i++) {

14 freq_sp[arr[i]]++;

15 usleep(100);

16 }

17

18

19 // calculate the sum of all frequencies to check that the calculation is correct

20

21 long arrsum = 0;

22 for (int i=0; i<ndigits; i++) arrsum += freq_sp[i];

23 printf("Sum of all frequencies (single process) : %'14ld (%.0f sec)\n",arrsum,difftime(time(NULL), start_time));

24

25 free(freq_sp);

26

27

28 // PART II -- counting frequencies with multiple processes

29

30

31 // define a shared memory to store the frequency counters

32 long *freq_mp = mmap(0, ndigits * sizeof(long), PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);

33 if (freq_mp == MAP_FAILED) {perror("mmap");exit(1);}

34

35 // define a semaphore set for synchronizing write access to shared memory

36 int nsems = 1; // number of semaphores in this set

37 int semid = semget(IPC_PRIVATE, nsems, 0666 | IPC_CREAT);

38

39

40 // initialize the semaphore

41 semun_t semun = {.val = 1}; // initial semaphore value => 1 = released/unlocked

42 semctl(semid, 0, SETVAL, semun);

43

44 struct sembuf sb = {.sem_num = 0, .sem_op = 0, .sem_flg=0}; // struct to feed into semaphore functions

45

46 int nproc = 2; // the number of child processes to run simultaneously

47

48 start_time = time(NULL); // reset the time counter

49

50 int pid;

51 for (int p=0; p<nproc; p++){

52

53 if ((pid = fork()) < 0) {perror("fork");exit(1);} // exit if fork was not successful

54

55 // define what each child process will do

56 if (pid==0) {

57

58 // define a segment that each child process works on independently

59 long from_idx = (p==0) ? 0 : p * (arrlen/nproc);

60 long to_idx = (p==nproc-1) ? arrlen : (p+1) * (arrlen/nproc);

61

62 // sum up the numbers in this segment of the array

63 for (long i=from_idx; i<to_idx; i++) {

64

65 sb.sem_op = -1; // lock the semaphore

66 semop(semid, &sb, 1);

67

68

69 freq_mp[arr[i]]++; // increment frequency counter per digit [multi process]

70 usleep(100);

71

72 sb.sem_op = 1; // release/unlock the semaphore

73 semop(semid, &sb, 1);

74

75

76 }

77

78 _exit(0);

79 }

80

81 }

82

83 // wait for all child processes to finish

84 while(wait(NULL) != -1);

85

86

87 // calculate the sum of all frequencies to check that the calculation is correct

88

89 arrsum = 0;

90 for (int i=0; i<ndigits; i++) arrsum += freq_mp[i];

91

92 printf("Sum of all frequencies (multiprocessing): %'14ld (%.0f sec)\n",arrsum,difftime(time(NULL), start_time));

93

94

95 // cleanup

96

97 if (semctl(semid, 0, IPC_RMID) == -1) {perror("semctl remove");exit(1);} // remove semaphore

98

99 if (munmap(freq_mp, ndigits * sizeof(long)) == -1) {perror("munmap");exit(1);} // unmap shared memory

100

101 free(arr);

Running above code will output something like this:

1Sum of all frequencies (single process) : 100,000 (13 sec)

2Sum of all frequencies (multiprocessing): 100,000 (13 sec)

Great. The semaphores work. Write access to shared memory is now synchronized and all processes' writes are successfully completed. However, please note that this synchronization came at a cost. All of the performance gain achieved via multiprocessing seems to be lost. Now, multiprocessing takes the same 13 seconds as did a single process.

Memory synchronization comes at a cost

That’s disappointing, isn’t it? It was for me when I first noticed the enormous negative performance impact semaphores can have. Every time a lock is set or unset, a low-level call to the OS is made which is slow. Let’s understand in more detail what’s happening and whether there is any option to improve performance.

Based on our current logic, both/all processes lock the same semaphore every time they need to write. This means that the probability that a process that wants to write to the shared variable needs to wait until the semaphore is unlocked is very high. Basically, the probability is 100% because all processes use the same lock.

Therefore, if we were to use multiple locks, for example a different lock for each digit (remember, we want to count the frequencies per digit 0-9) then the probability of encountering locked memory would decrease to only 10% since we have 10 different locks.

As semaphores are defined in sets this can be implemented very easily. We need to make 3 changes:

First, when we define the semaphore we make the size of the set equal to the number of digits, i.e. 10.

1 int nsems = ndigits; // number of semaphores in this set

2 int semid = semget(IPC_PRIVATE, nsems, 0666 | IPC_CREAT);

With this change, the same semid now refers to a set of 10 semaphores instead of a single semaphore.

Second, we need to initialize all of the semaphores in the set. Therefore, we put the semctl initialization into a loop. (Alternatively, you could also use the SETALL option instead of a loop. Please refer to the semctl man page.)

1 // semctl(semid, 0, SETVAL, semun);

2 for (int i=0; i<ndigits; i++) semctl(semid, i, SETVAL, semun);

Third, when we lock or release the semaphore we need to define which semaphore in the set we refer to. This is done by adding

1sb.sem_num = arr[i];

as follows:

1 for (long i=from_idx; i<to_idx; i++) {

2

3 sb.sem_num = arr[i]; // pick the semaphore in the set

4

5 sb.sem_op = -1; // lock the semaphore

6 semop(semid, &sb, 1);

7

8 freq_mp[arr[i]]++; // increment frequency counter per digit [multi process]

9 usleep(100);

10

11 sb.sem_op = 1; // release/unlock the semaphore

12 semop(semid, &sb, 1);

13

14 }

If you apply all of these changes, i.e. you now use 10 semaphores instead of 1, and run above code again you should see something like this:

1Sum of all frequencies (single process) : 100,000 (13 sec)

2Sum of all frequencies (multiprocessing): 100,000 (7 sec)

Voila! The multiprocessing result is still correct (i.e. memory synchronization via semaphores works) and we see some performance improvement from multiprocessing compared to a single process.

Conclusion

Multiprocessing requires a completely new consideration, or redesign, of the application logic. Processes need to communicate with each other (IPC) to share the result of their work. When shared memory is used for IPC, memory access needs to be synchronized to avoid unpredictable outcomes caused by race conditions. Synchronization, no matter whether via semaphores or mutexes, comes at a cost that often negatively compensates any performance gain from multiprocessing. To reduce the cost a developer has to carefully weigh different options for the design of semaphores or mutexes.

Sources and further references:

- Beej’s Guide to Unix IPC

- POSIX thread (pthread) libraries

- Threads: Basic Theory and Libraries

- Mutexes and Semaphores Demystified

.